You don't know what you don't know

Lucia Mormocea

Principal Software Developer, BBC Bitesize

The story of how the Bitesize team created a quiz feature from their bedrooms, kitchens and garages.

It was the middle of summer 2020. After the whirlwind brought on by coronavirus, lockdown, working from home and, not least, Daily Lessons, we were just settling into a comfortable routine.

And when I say ‘we’, I mean the lovely bunch of people that make up the Bitesize team these days: developers, testers, UX designers, business analysts, project and product managers.

Tickets were added in at a steady pace, features were coming along nicely. We even spent a couple of days in a hackathon devising new ideas for the website while shaking off some technical cobwebs.

Overall life working from home was as peachy as it could get in a global pandemic.

Maths, Science, Magic

We started looking ahead at what we could do to support our audiences in the new autumn term. Students faced interrupted lessons and a potential mix of in-school and at-home learning. We came up with a number of things we could offer to help. One of them was using quizzes to diagnose gaps in their knowledge and recommend where they should focus.

Quizzes are nothing new to Bitesize; we already have two types of them. One is the old favourite, made up of ten questions at the end of the revision chapters. The other is a more elaborate learning experience, powered by machine learning, which aims to help the user retain information.

This quiz was different!

The data science team had a prototype that used existing questions from test chapters and computed the user’s mastery level across each study guide with a certain level of confidence.

The more questions the user was asked, the higher the confidence level, and the more accurate the mastery level.

Based on those mastery levels we could suggest different topics or guides that the user should revise, effectively allowing us to diagnose a user’s gaps in knowledge.
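To make the link between question count and confidence concrete, here is a minimal sketch. It is not the data science team's algorithm, which is not described in this post. It swaps in a simple Beta-Bernoulli estimate, and the function name and the way confidence is scaled are assumptions made for illustration.

```python
import math


def estimate_mastery(answers):
    """Return (mastery, confidence) for one study guide.

    `answers` is a list of booleans, True meaning a correct answer.
    Illustrative stand-in only: a Beta-Bernoulli model with a uniform
    prior, not the algorithm used by Bitesize.
    """
    correct = sum(1 for answer in answers if answer)
    wrong = len(answers) - correct

    # Posterior Beta(alpha, beta) after starting from a uniform Beta(1, 1).
    alpha = 1.0 + correct
    beta = 1.0 + wrong
    total = alpha + beta

    mastery = alpha / total  # posterior mean
    variance = (alpha * beta) / (total ** 2 * (total + 1.0))

    # The uniform prior has the widest spread (variance 1/12), so scaling
    # by it gives 0.0 with no answers and approaches 1.0 as answers grow.
    confidence = 1.0 - math.sqrt(variance) / math.sqrt(1.0 / 12.0)
    return mastery, confidence


few = estimate_mastery([True, False])
many = estimate_mastery([True, False] * 5)
print(few, many)
```

In this sketch both calls give the same mastery estimate, but the second, with ten answers instead of two, reports a higher confidence.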

“Trusssst in me…” (Kaa, the python, Jungle Book)

The data science team had written their prototype in Python. Within the Bitesize team we had never touched that particular gem of a programming language so we were very apprehensive at first.

However, Python is “the” language of choice for machine learning and data science in general, not least because of two of its most powerful libraries for mathematical operations: numpy and scipy.

Those two libraries featured heavily in the prototype presented to us, so it made perfect sense for us to try and use Python for the actual implementation. However, it did mean we had to produce a whole new CI/CD pipeline for it to work.

It’s a long way to Live if you wanna rock ’n roll

Having a fantastically clever algorithm that can provide mastery and confidence scores is great!

Shaping it into a product that provides value to our users is a whole different proposition.

Some of the initial questions we had to answer were:

- How should we package the algorithm so that it can be hosted in our AWS VPC?

- What’s the algorithm’s domain and data contract?

- How do we explain the algorithm to our audiences? Understandably, these days people can be very suspicious of software that uses data to provide information.

- And last but not least, what happens when there is a Live bug? Contrary to popular belief, software will always have bugs. Our job is to ensure they are few and far between.

A few shaping and discovery sessions with the data services team led to refactoring the prototype to a new working model.

We started calling it the Efficient Learning algorithm or just “the algorithm”.

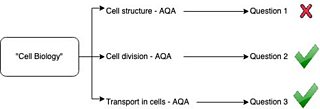

Its domain seemed to be made up of questions and answers. It required knowledge of the Bitesize curriculum domain, made up of subjects, topics and study guides. For example, it needed to know that the topic Cell Biology contains guides like Cell Structure and Cell Division.

The results returned would be an array of guides with mastery and confidence scores for each one.
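A data contract along these lines could be sketched as follows. The class and field names are hypothetical, as is the assumption that scores fall between 0 and 1. Only the Cell Biology example and the overall shape (answered questions in, guides with mastery and confidence out) come from the description above.

```python
from dataclasses import asdict, dataclass
from typing import Dict, List

# Hypothetical slice of the curriculum: topic -> study guides.
CURRICULUM: Dict[str, List[str]] = {
    "Cell Biology": ["Cell Structure", "Cell Division"],
}


@dataclass
class AnsweredQuestion:
    question_id: str  # hypothetical identifier for the question
    guide: str        # study guide the question belongs to
    correct: bool     # whether the user answered correctly


@dataclass
class GuideResult:
    guide: str
    mastery: float     # assumed to lie between 0 and 1
    confidence: float  # assumed to lie between 0 and 1


def validate_answers(answers: List[AnsweredQuestion], topic: str) -> None:
    """Raise ValueError if an answer refers to a guide outside the topic."""
    known = set(CURRICULUM[topic])
    for answer in answers:
        if answer.guide not in known:
            raise ValueError(f"{answer.guide!r} is not a guide in {topic!r}")


# Example input and one entry of the kind of output described above.
answers = [AnsweredQuestion("q1", "Cell Structure", True)]
validate_answers(answers, "Cell Biology")
print(asdict(GuideResult("Cell Structure", mastery=0.8, confidence=0.6)))
```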

While our understanding of the Maths behind the scenes was not that great, hence the “Mystery Box”, we knew enough to get started on building the infrastructure and the UI.

To Lambda or not to Lambda

One of the early decisions made about this new quiz was to host the algorithm as a lambda.

There were some very good reasons for that. The algorithm looked to be a data in, data out function which did not need to store the results or any user data, so we did not need a permanently running EC2 instance. It could be packaged in a lambda that spun up, computed results, then shut down.

In addition, the “algorithm” did not need access to any of our systems except perhaps the curriculum data. Since the required data about subjects, topics and study guides was static, we could just add it to one of our S3 buckets.
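The “data in, data out” shape might look roughly like the handler below. This is a sketch under assumptions: the event layout follows the API Gateway proxy format, the payload field names are hypothetical, the curriculum is inlined rather than read from S3, and the scoring is a deliberately naive placeholder rather than the real algorithm.

```python
import json

# Stand-in for the static curriculum file. In a deployed function this
# could be loaded from an S3 bucket instead of being inlined.
CURRICULUM = {"Cell Biology": ["Cell Structure", "Cell Division"]}


def score_guides(topic, answers):
    """Placeholder scoring: fraction of correct answers per guide."""
    results = []
    for guide in CURRICULUM[topic]:
        marks = [a["correct"] for a in answers if a["guide"] == guide]
        if not marks:
            continue  # no answers for this guide, so nothing to report
        results.append({
            "guide": guide,
            "mastery": sum(marks) / len(marks),
            # Placeholder that simply grows with the number of answers.
            "confidence": len(marks) / (len(marks) + 1),
        })
    return results


def handler(event, context):
    """Stateless entry point: everything needed arrives in the event."""
    payload = json.loads(event["body"])
    results = score_guides(payload["topic"], payload["answers"])
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"guides": results}),
    }
```

Nothing is kept between invocations, which is why the caller has to send the full list of answered questions every time.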

Lambdas are a lot easier to develop, wonderfully encapsulated, easy to spin up and tear down. Plus we already had a few kicking about our estate and we felt confident we could do this fast.

A Matter of State

Choosing to create a lambda implied that something else would have to handle the state. The “state” in our case consisted of a list of questions sent to the algorithm with a flag for whether the answer was right or wrong.

Initial UI drafts suggested that the quiz would run one question at a time and would need to store the user’s progress. We did not need any personal user data for this, which suited us just fine because we wanted the quiz to be available to users who are not signed in.

This is where User Store was the right solution for us. It is currently a client side module which handles CRUD operations for key-value data using the local storage API. The ambition for the future is to update the component to use a more permanent cloud storage solution whilst hiding the complexities away from the UI.

For the quizzes MVP we use it to store the questions which have already been answered. We could later extend this to securely store achievements and progress for signed in users.

UI and the MVP approach

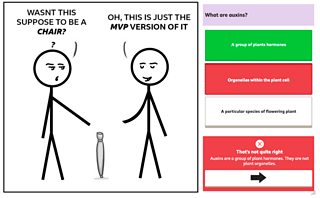

The MVP planning sessions with the teams are always fun!

They involve creative discussions about what the core functionality should be. Then we look at the time we have to build it and start trimming the edges.

The MVP approach allows the whole team to build this product as fast as possible, then learn what works for the audience, and what doesn’t.

UX designers also changed their approach. Instead of carrying on a big discovery phase with lots of user studies, they switched to a ‘build, measure, learn’ one.

The UI focused on getting the user to results as fast as possible with minimal onboarding. It had to cater for questions and answers with very different text sizes.

It needed to be friendly to smaller devices since it was estimated that most students would be doing their studying on tablets or phones.

The UI also challenged us to think about how to display results in a way that is meaningful to a user.

Raw mastery scores are not very useful to a user on their own. We needed a system that could explain how well a student had done in each topic they chose.

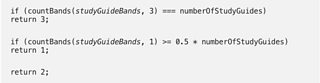

In order to help with this, the data science team expanded the algorithm to return band 1, 2 or 3 for each study guide with band 3 being the highest mastery level.

To simplify results even further, we created band 1, 2 and 3 for the whole topic based on the scores of each study guide.

The bands map to a colour system of Red, Orange and Green, with band 1 shown as Red, band 2 as Orange and band 3 as Green.

The most useful part for the user is the study suggestions provided for each topic. For Red and Orange bands we display links to the study guides with the lowest mastery scores. This should direct our users to content that is highly relevant to their revision goals.
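The banding and suggestion logic could be sketched like this. The thresholds, the use of a mean to derive the topic band, and all names are assumptions for illustration. The post only says that band 3 is the highest, that the colours are Red, Orange and Green, and that Red and Orange topics link to the guides with the lowest mastery.

```python
from typing import Dict

# Colour order taken from the text: band 1 lowest, band 3 highest.
BAND_COLOURS = {1: "Red", 2: "Orange", 3: "Green"}


def band_for(mastery: float, low: float = 0.4, high: float = 0.7) -> int:
    """Map a mastery score to a band. The thresholds are made up."""
    if mastery < low:
        return 1
    if mastery < high:
        return 2
    return 3


def topic_summary(guide_mastery: Dict[str, float], max_links: int = 2) -> dict:
    """Summarise a topic from the mastery score of each of its guides."""
    # Assumption: the topic band is derived from the mean guide mastery.
    mean = sum(guide_mastery.values()) / len(guide_mastery)
    band = band_for(mean)

    # Guides ordered from weakest to strongest mastery.
    weakest_first = sorted(guide_mastery, key=guide_mastery.get)
    return {
        "band": band,
        "colour": BAND_COLOURS[band],
        # Only Red and Orange topics get study suggestions.
        "suggested_guides": weakest_first[:max_links] if band < 3 else [],
    }


print(topic_summary({"Cell Structure": 0.9, "Cell Division": 0.3}))
```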

Infrastructure

Getting a Python lambda off the ground was not trivial!

First we had to set up a local development environment to run the function locally. We used a Python 3 virtualenv and AWS SAM, which enabled us to test the function with different types of events.

Our CI/CD pipelines are mostly done via Jenkins and Cosmos. Cosmos is an internal tool that provides a GUI for managing CloudFormation stacks. We created a pipeline that spun up a Docker container from a Python 3 image. The pipeline copied the code, ran the unit tests and packaged the build and dependencies into a zip file. From there it was a simple step to publish it to our AWS VPC.

Cosmos uses the AWS CLI behind the scenes, so a lambda package can be no larger than 50 MB when it is uploaded. However, when creating the package we quickly discovered that the numpy and scipy libraries in the dependencies pushed the size of the package over that threshold.

This is where AWS Layers were helpful. The Layers functionality in AWS allowed us to move these two runtime dependencies outside the function. AWS offers an out of the box layer for the two libraries so we did not need to create one ourselves.

Automate all the things!

Between our development teams, our testers are spread thinner than marmite on toast. So our plans to secretly make them GCSE experts by getting them to manually test the quiz over and over again seemed unlikely.

We came to the conclusion that we had created a very challenging system to test.

The algorithm was not deterministic. It would not be possible to force it to provide the same questions with the same input. Luckily it provided the same mastery results if the same questions were used.

The UI was intricate, worked fully client side and the quiz was 20 questions long. So manual testing would be long, boring and repetitive. Exactly the opposite of what we want our testers to do!

The test team built two sets of automated tests in Cypress. One set runs contract tests against the responses coming from the API Gateway. The other runs an end-to-end test of the UI and can run through the whole quiz interface as many times as needed.
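The team's contract tests are written in Cypress. Purely to illustrate the idea of checking the shape of a response rather than its exact values, here is a small Python sketch. The field names and the 0 to 1 score range are hypothetical.

```python
from typing import Any, List


def _is_score(value: Any) -> bool:
    """True for a real number between 0 and 1 (booleans are rejected)."""
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False
    return 0 <= value <= 1


def contract_violations(body: Any) -> List[str]:
    """Return a list of problems found in a results payload."""
    guides = body.get("guides") if isinstance(body, dict) else None
    if not isinstance(guides, list):
        return ["'guides' must be a list"]

    problems = []
    for index, guide in enumerate(guides):
        if not isinstance(guide, dict):
            problems.append(f"guides[{index}] must be an object")
            continue
        if not isinstance(guide.get("guide"), str):
            problems.append(f"guides[{index}].guide must be a string")
        for field in ("mastery", "confidence"):
            if not _is_score(guide.get(field)):
                problems.append(f"guides[{index}].{field} must be in [0, 1]")
        band = guide.get("band")
        if isinstance(band, bool) or band not in (1, 2, 3):
            problems.append(f"guides[{index}].band must be 1, 2 or 3")
    return problems


good = {"guides": [{"guide": "Cell Structure", "mastery": 0.8,
                    "confidence": 0.6, "band": 3}]}
print(contract_violations(good))
```

A check like this stays valid even though the questions served are not deterministic, because it asserts on structure and ranges only.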

Creating and updating automated tests has become part of the regular development process and as a result it has given us a lot of confidence in our features over the past year. Automated tests run across the whole estate on an hourly basis and flag breaking changes.

This means we can deploy to Live faster, catch problems early and we are not restricted by the number of testers in our team.

You Don’t Know What You Don’t Know … Until you know it!

Hopefully Bitesize quizzes will help you figure out what you don’t know. Well, at least as far as GCSEs go.

We had a great time making this and look forward to adding more subjects and introducing new types of questions.